Cracking the Code: A Beginner’s Guide to the Metaverse, VR Hardware, and a Future Built with Light

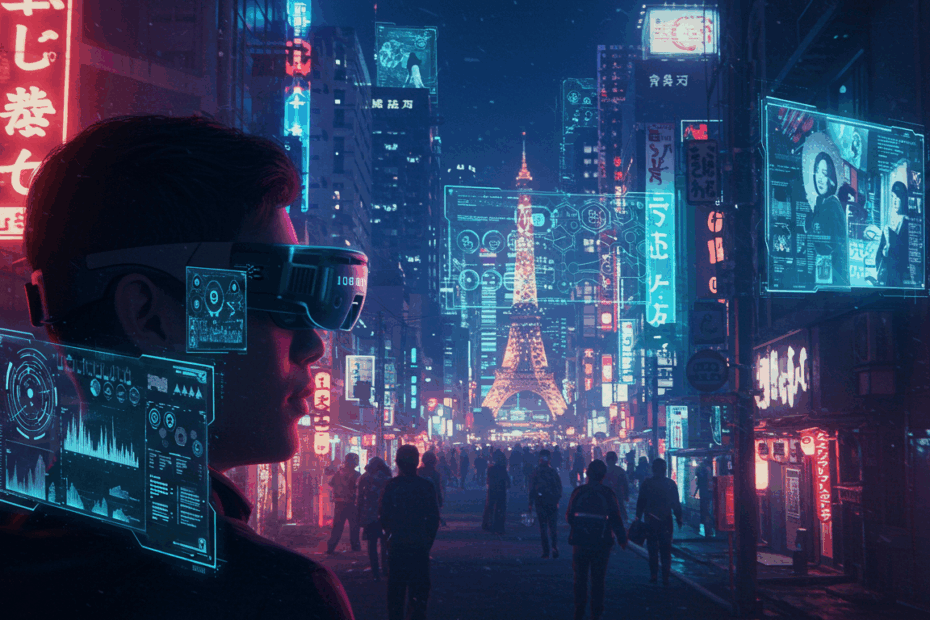

John: Welcome, everyone. For today’s deep dive, we’re stepping through the digital looking glass. The term “Metaverse” is thrown around a lot, often conjuring images of sci-fi blockbusters. But the reality is more nuanced, and it’s being built right now, piece by piece. We’re going to demystify it by focusing on three foundational pillars: the hardware that gets you in, the technology that maps our world to the virtual, and the digital destinations themselves.

Lila: That sounds like the perfect place to start, John. I think a lot of people, myself included, hear “Metaverse” and either picture a video game or just get completely lost. So, we’re talking about the gear, the bridge between our world and the digital one, and the virtual spaces we can visit? A gateway, a bridge, and a destination. I like that.

John: Exactly. Let’s start with the gateway: Virtual Reality, or VR, hardware. This is the most tangible part of the equation for most people. It’s not just a screen you look at; it’s a device designed to trick your senses into believing you’re somewhere else entirely. This feeling is what we call immersion.

Basic Info: What Are We Even Talking About?

Lila: Okay, so when we say “VR hardware,” most people probably think of the Meta Quest headsets. Are they all pretty much the same, just big goggles that strap to your head?

John: That’s a great starting point, as the Meta Quest line really brought VR to the mainstream. But the hardware landscape is surprisingly diverse. At a high level, we can categorize them into three main families:

- Standalone VR: This is the category the Meta Quest 3 and the Apple Vision Pro fall into. The processing power, storage, and tracking sensors are built directly into the headset, and no external computer is needed. (The Quest 3 also carries its battery on board, while the Vision Pro uses an external battery pack connected by a cable.) This freedom of movement makes them incredibly accessible.

- PC-VR: These are headsets like the Valve Index or the Varjo Aero. They tether to a powerful gaming PC. The headset itself is primarily a high-end display and tracking device, while the computer does all the heavy lifting—rendering incredibly detailed graphics and complex physics. This is for the high-fidelity, top-tier experience.

- Console-VR: The prime example here is Sony’s PlayStation VR2 (PSVR2), which is built to connect to a PlayStation 5 console. It’s a middle ground, offering a more powerful experience than most standalone headsets but without the complexity and cost of a high-end PC rig.

Lila: So, it’s a trade-off between convenience, power, and cost. Standalone is for everyone, PC-VR is for the enthusiasts with deep pockets, and Console-VR is for the existing PlayStation gamers. What actually makes one headset feel more “real” than another? What are the key specs to look for?

John: That’s the million-dollar question. It comes down to a handful of core technologies working in concert. We’re talking about the display resolution (how many pixels are in front of each eye), the refresh rate (how many times the image updates per second, crucial for avoiding motion sickness), and the Field of View, or FOV (how much of your peripheral vision the virtual world fills). Higher numbers are generally better across the board. Then you have the lenses, which focus the light from the screens into your eyes. Newer “pancake” lenses allow for slimmer, lighter headsets compared to the older, bulkier Fresnel lenses.
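To make those specs a little more concrete, here is a rough Python sketch. It converts a refresh rate into the time the headset has to draw each frame, and estimates average pixels per degree from horizontal resolution and field of view. The function names and example numbers are hypothetical, and the model assumes pixels are spread evenly across the view and ignores lens distortion. Treat it as an intuition aid, not as how any manufacturer actually rates its displays.

```python
# Back-of-the-envelope helpers for two headset specs.
# Simplifying assumptions: pixels are spread evenly across the field of view,
# and lens distortion is ignored. Real headsets are not rated exactly this way.


def frame_time_ms(refresh_rate_hz: float) -> float:
    """Milliseconds available to render each frame at a given refresh rate."""
    return 1000.0 / refresh_rate_hz


def pixels_per_degree(horizontal_pixels: int, horizontal_fov_deg: float) -> float:
    """Average angular resolution: how many pixels cover one degree of the view."""
    return horizontal_pixels / horizontal_fov_deg


if __name__ == "__main__":
    # Hypothetical illustration values, not the specs of any particular headset.
    for hz in (72, 90, 120):
        print(f"{hz} Hz -> {frame_time_ms(hz):.1f} ms per frame")
    print(f"2000 px over 100 degrees -> {pixels_per_degree(2000, 100):.1f} px/degree")
```

The trade-off shows up immediately: going from 72 Hz to 120 Hz shrinks the per-frame budget from about 13.9 ms to about 8.3 ms, so a faster display also demands more processing power. Likewise, widening the FOV without adding pixels lowers the pixels per degree, which can make the image look softer.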

Lila: And what about tracking? How does the headset know where I am in my room and where my hands are? Is that where our “bridge” technology comes in?

John: Precisely. This leads us directly to the second pillar. Most modern standalone headsets use what’s called inside-out tracking. They have several cameras on the outside that constantly scan the room, using computer vision to determine the headset’s position in 3D space. They also track the controllers, which typically carry infrared LEDs that are invisible to the human eye but clearly visible to the headset’s cameras. But to truly merge the real and virtual worlds, an even more powerful technology is emerging: LiDAR.
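Real inside-out tracking pipelines are far more complex than anything that fits here, since they fuse many camera frames with inertial data. Still, one basic ingredient of camera-based depth perception is easy to show: stereo triangulation. When two cameras a known distance apart both see the same feature, the shift in its image position (the disparity) reveals how far away it is. The sketch below uses the textbook pinhole-camera model; the focal length and camera spacing are hypothetical round numbers, not any headset’s real parameters.

```python
# Pinhole stereo model: depth Z = f * B / d.
# A textbook building block only; real inside-out tracking fuses many frames
# with inertial data and is far more involved than this.


def depth_from_disparity(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth (in meters) of a feature seen by two horizontally offset cameras.

    focal_length_px: camera focal length expressed in pixels
    baseline_m: distance between the two camera centers, in meters
    disparity_px: horizontal shift of the same feature between the two images
    """
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of both cameras")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical camera parameters chosen for round numbers.
    f_px, baseline = 500.0, 0.064
    for d in (32.0, 16.0, 8.0):
        depth = depth_from_disparity(f_px, baseline, d)
        print(f"disparity {d:>4.0f} px -> depth {depth:.2f} m")
```

Note the inverse relationship: halving the disparity doubles the estimated depth. That is also why camera-based depth gets less precise for distant objects, where a one-pixel error matters much more.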

Under the Hood: The Hardware Powering the Portal

VR Hardware: Your Window to Another World

Lila: Before we jump fully into LiDAR, can we break down the hardware a bit more? It feels like there’s a whole computer inside these things. What are the guts of a standalone headset like the Quest 3?

John: Of course. Think of a standalone headset as a specialized smartphone you wear on your face. It has a System on a Chip (SoC), which is like the device’s brain, combining the main processor (CPU), graphics processor (GPU), and other components all in one. For example, the Quest 3 uses Qualcomm’s Snapdragon XR2 Gen 2 chip, which is specifically designed for the demands of VR and Mixed Reality (MR). It also has RAM (memory for running apps), internal storage (for games and experiences), and a battery. It’s a marvel of miniaturization.

Lila: And the controllers? They seem like more than just buttons. They let you “grab” things.

John: They are. Modern VR controllers, like the Meta Quest Touch Plus or the PSVR2 Sense controllers, are packed with technology. They have the standard buttons, triggers, and analog sticks, but they also feature haptic feedback (sophisticated vibrations that can simulate textures or impacts). The PSVR2 Sense controllers add adaptive triggers, which can provide resistance, like when drawing a bowstring. Controllers also have sensors like accelerometers and gyroscopes to track their orientation, complementing the camera-based tracking of their position.
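Meta and Sony don’t publish their exact sensor-fusion code, so here is a textbook stand-in showing why a controller needs both sensors. A gyroscope gives smooth rotation rates, but integrating them accumulates drift; an accelerometer senses the direction of gravity, which gives a drift-free but noisy tilt reading. A complementary filter blends the two. This sketch handles a single axis (pitch), and the sample rate, bias, and blend weight `alpha` are hypothetical; production systems typically use more elaborate filters.

```python
import math

# A textbook complementary filter for one axis (pitch). This is NOT any vendor's
# actual sensor-fusion code; production systems typically use more elaborate filters.


def accel_pitch_deg(ax: float, ay: float, az: float) -> float:
    """Pitch estimated from the direction of gravity in the accelerometer reading."""
    return math.degrees(math.atan2(-ax, math.sqrt(ay * ay + az * az)))


def complementary_filter(samples, dt: float, alpha: float = 0.98) -> float:
    """Fuse gyroscope and accelerometer samples into a pitch estimate (degrees).

    samples: iterable of (gyro_pitch_rate_deg_per_s, ax, ay, az) tuples
    dt: seconds between samples
    alpha: weight on the integrated gyro; (1 - alpha) goes to the accelerometer
    """
    pitch = 0.0
    for gyro_rate, ax, ay, az in samples:
        gyro_estimate = pitch + gyro_rate * dt        # smooth, but drifts with gyro bias
        accel_estimate = accel_pitch_deg(ax, ay, az)  # drift-free, but noisy when moving
        pitch = alpha * gyro_estimate + (1 - alpha) * accel_estimate
    return pitch


if __name__ == "__main__":
    g = 9.81
    # Hypothetical: controller held still and level, gyro with a 1 deg/s bias, sampled at 100 Hz.
    level_with_bias = [(1.0, 0.0, 0.0, g)] * 1000
    print(f"fused pitch: {complementary_filter(level_with_bias, dt=0.01):.2f} deg")
    print(f"gyro-only pitch after 10 s: {1.0 * 0.01 * 1000:.2f} deg")
```

With a 1 deg/s gyro bias, pure integration drifts to 10 degrees after 10 seconds, while the fused estimate settles at about 0.49 degrees because the accelerometer keeps pulling it back toward the true tilt. Gravity can only correct tilt, though; rotation around the vertical axis (yaw) isn’t observable from it, which is one reason controllers also lean on the headset’s cameras.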

Lila: So you have the headset creating the visual and auditory illusion, and the controllers providing the sense of touch and interaction. It’s a full sensory package. I’m starting to see why the hardware is so critical. It’s not just a monitor; it’s the entire vehicle for the experience.

John: That’s the perfect way to put it. And the quality of that vehicle determines how smooth, believable, and comfortable the journey is. A low refresh rate or poor tracking can quickly lead to nausea, breaking the immersion completely. That’s why companies are pouring billions into R&D to improve these core hardware components. They’re chasing what Michael Abrash, a leading VR researcher, calls “visual reality”—a virtual experience indistinguishable from the real world.

Technical Mechanism: Building Bridges with Light

LiDAR: The Unseen Architect of Virtual and Augmented Worlds

Lila: Okay, I’m ready for the “bridge” now. You mentioned LiDAR. I know the Pro-model iPhones have it, and I’ve heard it’s used for self-driving cars, but how does it connect to the Metaverse?

John: LiDAR, which stands for Light Detection and Ranging, is a remote sensing method that uses pulsed laser light to measure distances with incredible precision. In essence, a LiDAR sensor sends out thousands of tiny, invisible laser pulses every second. It then measures how long each pulse takes to hit an object and bounce back. Because light travels at a known, constant speed, this “time of flight” tells the sensor precisely how far away each surface is. Combining those distances with the direction each pulse was fired creates a highly detailed, three-dimensional map of its surroundings. We call this map a point cloud.
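The time-of-flight idea reduces to one line of arithmetic: the pulse travels to the surface and back, so the distance is the speed of light times the round-trip time, divided by two. Pairing each distance with the direction the pulse was fired turns the readings into 3D points. The sketch below uses a simple spherical-to-Cartesian convention (x forward, y to the side, z up) that is our own assumption, since real sensors each define their own, and the sample readings are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance to a surface from a pulse's round-trip time.

    The pulse travels out and back, so the one-way distance is half the path.
    """
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


def to_point(distance_m: float, azimuth_deg: float, elevation_deg: float):
    """Turn one range reading plus its beam direction into an (x, y, z) point."""
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (
        distance_m * math.cos(el) * math.cos(az),
        distance_m * math.cos(el) * math.sin(az),
        distance_m * math.sin(el),
    )


if __name__ == "__main__":
    # Hypothetical readings: (round-trip time in seconds, azimuth deg, elevation deg)
    readings = [(20e-9, -10.0, 0.0), (20e-9, 0.0, 0.0), (13.3e-9, 10.0, 5.0)]
    point_cloud = [to_point(tof_distance_m(t), az, el) for t, az, el in readings]
    for x, y, z in point_cloud:
        print(f"({x:.2f}, {y:.2f}, {z:.2f}) m")
```

The arithmetic also shows why LiDAR needs extremely precise timing: a 1-nanosecond error in the round-trip time shifts the measured distance by about 15 cm.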

Lila: So it’s like sonar, but with light? It paints a 3D picture of a room in real time?

John: Exactly. Now, imagine this capability in a VR or MR headset. This is a game-changer for several reasons. For one, it enables fast and accurate room meshing. Instead of the cameras having to infer where the walls, floor, and furniture are from flat images, the LiDAR sensor measures their depth directly. This makes setting up a safe play area faster and more reliable.
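Once the floor outline of the play area is known, the safety check itself is simple geometry: is the user’s tracked position, projected onto the floor, inside the boundary polygon, and how close is it to an edge? Below is a minimal sketch using the standard ray-casting (even-odd) test plus a point-to-segment distance. It is not how Meta’s or any other vendor’s boundary system is actually implemented; the room dimensions and the 0.3 m warning distance are hypothetical.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, z) position on the floor, in meters


def inside_boundary(p: Point, boundary: List[Point]) -> bool:
    """Ray-casting test: an odd number of edge crossings means p is inside."""
    x, z = p
    inside = False
    n = len(boundary)
    for i in range(n):
        x1, z1 = boundary[i]
        x2, z2 = boundary[(i + 1) % n]
        # Does this edge straddle the horizontal line through p?
        if (z1 > z) != (z2 > z):
            # x-coordinate where the edge crosses that line
            x_cross = x1 + (z - z1) * (x2 - x1) / (z2 - z1)
            if x < x_cross:
                inside = not inside
    return inside


def distance_to_boundary(p: Point, boundary: List[Point]) -> float:
    """Shortest distance from p to any edge of the boundary polygon."""
    px, pz = p
    best = math.inf
    n = len(boundary)
    for i in range(n):
        x1, z1 = boundary[i]
        x2, z2 = boundary[(i + 1) % n]
        dx, dz = x2 - x1, z2 - z1
        seg_len_sq = dx * dx + dz * dz
        # Project p onto the edge, clamped to the edge's endpoints
        t = 0.0 if seg_len_sq == 0 else max(0.0, min(1.0, ((px - x1) * dx + (pz - z1) * dz) / seg_len_sq))
        best = min(best, math.hypot(px - (x1 + t * dx), pz - (z1 + t * dz)))
    return best


if __name__ == "__main__":
    room = [(0.0, 0.0), (3.0, 0.0), (3.0, 2.0), (0.0, 2.0)]  # hypothetical 3 m x 2 m area
    for pos in [(1.5, 1.0), (2.8, 1.0), (3.5, 1.0)]:
        inside = inside_boundary(pos, room)
        dist = distance_to_boundary(pos, room)
        warn = inside and dist < 0.3  # hypothetical warning distance
        print(pos, "inside" if inside else "OUTSIDE", f"{dist:.2f} m from edge", "(show boundary)" if warn else "")
```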

Lila: That makes sense for safety, but what about building the Metaverse itself? I keep seeing the terms “reality capture” and “3D model rendering.” Is that where LiDAR really shines?

John: That’s its most transformative application. With LiDAR, we can perform what’s known as reality capture. You can walk into a room, a building, or even an entire outdoor site, and a LiDAR-equipped device can scan it and generate a dimensionally accurate 3D model, which can be made photorealistic by combining the scan with camera imagery. Companies like RICK Engineering are already using this to change how they visualize construction sites. They can capture a site in detail and upload it to an interactive platform for clients.
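Raw scans produce far more points than a real-time app can comfortably handle. A common early step when turning a scan into a usable model is voxel-grid downsampling: divide space into small cubes and replace all the points inside each cube with their average. Here is a minimal sketch of that one step with a hypothetical voxel size. Production reality-capture pipelines do much more, such as aligning multiple scans and reconstructing surfaces.

```python
import math
from collections import defaultdict
from typing import Dict, List, Tuple

Point3 = Tuple[float, float, float]


def voxel_downsample(points: List[Point3], voxel_size: float) -> List[Point3]:
    """Keep one averaged point per occupied voxel (a cube of side voxel_size)."""
    buckets: Dict[Tuple[int, int, int], List[Point3]] = defaultdict(list)
    for x, y, z in points:
        # Integer voxel coordinates identify which cube the point falls in
        key = (math.floor(x / voxel_size), math.floor(y / voxel_size), math.floor(z / voxel_size))
        buckets[key].append((x, y, z))
    result = []
    for pts in buckets.values():
        n = len(pts)
        result.append((
            sum(p[0] for p in pts) / n,
            sum(p[1] for p in pts) / n,
            sum(p[2] for p in pts) / n,
        ))
    return result


if __name__ == "__main__":
    # Hypothetical synthetic scan: a 1 m x 1 m floor patch sampled every centimeter.
    dense = [(i * 0.01, j * 0.01, 0.0) for i in range(100) for j in range(100)]
    sparse = voxel_downsample(dense, voxel_size=0.05)
    print(f"{len(dense)} points -> {len(sparse)} points")
```

The voxel size is the main design choice: larger cubes shrink the data more aggressively but blur fine details, so a scan meant for precise measurements would use a smaller size than one meant only for a quick VR walkthrough.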

Lila: So, you could scan your own living room and then redecorate it in VR? Or a museum could scan an entire exhibit, allowing people from all over the world to walk through a perfect digital replica? That’s incredible. It’s not just about creating fantasy worlds; it’s about creating a “digital twin” of our own world.

John: You’ve hit the nail on the head. This blurs the line between Augmented Reality (AR), which overlays digital information onto the real world, and Virtual Reality (VR). The blended middle of that spectrum is called Mixed Reality (MR), and the whole spectrum is often grouped under the umbrella term Extended Reality (XR). A headset with good cameras and a LiDAR sensor, like the Apple Vision Pro, can seamlessly blend virtual objects with your real environment. It knows exactly where your coffee table is, so a virtual character can realistically jump onto it, or a virtual ball can bounce off your real wall.
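Once the headset has recognized a real wall as a flat plane, making a virtual ball bounce off it is ordinary game physics: reflect the ball’s velocity about the wall’s surface normal. This sketch assumes the headset’s scene understanding has already fitted a plane to the wall and supplies its unit normal pointing back into the room; the `restitution` parameter (how bouncy the ball is) is a hypothetical tuning value.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def bounce(velocity: Vec3, wall_normal: Vec3, restitution: float = 0.8) -> Vec3:
    """Velocity after hitting a plane whose unit normal points into the room.

    The component into the wall is reversed and scaled by restitution
    (1.0 = perfectly elastic); the component along the wall is unchanged.
    """
    vn = dot(velocity, wall_normal)
    if vn >= 0:
        return velocity  # moving away from (or parallel to) the wall: no bounce
    k = (1.0 + restitution) * vn
    return (
        velocity[0] - k * wall_normal[0],
        velocity[1] - k * wall_normal[1],
        velocity[2] - k * wall_normal[2],
    )


if __name__ == "__main__":
    # Hypothetical: a real wall ahead of the user along +x, facing back into the room.
    wall_normal = (-1.0, 0.0, 0.0)
    print(bounce((2.0, 0.0, 1.0), wall_normal))
```

The hard part in a real MR app is not this formula but knowing where the wall is and which way it faces, which is exactly what accurate depth sensing provides.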

Team & Community: The Architects and Inhabitants of Virtual Worlds

Lila: This brings us to the third pillar: the destinations. We have the hardware to get in and the LiDAR to connect our reality to the virtual. But where do we actually *go*? Who is building these virtual worlds?

John: The “who” isn’t a single entity. It’s a vast ecosystem of companies, independent developers, and, most importantly, the users themselves. On one end, you have massive, professionally developed platforms. Think of games that are becoming social hubs, like Fortnite or Roblox. On the other end, you have platforms specifically designed for social interaction and user-generated content, like VRChat and Rec Room.

Lila: I’ve heard a lot about VRChat. People create their own avatars and their own worlds, right? So the “team” is literally everyone who uses it?

John: In a very real sense, yes. VRChat provides the tools, the framework, and the servers, but the vast majority of the content—the millions of avatars and tens of thousands of unique worlds—is created and uploaded by its community. This is a fundamental aspect of the “open” Metaverse vision: it’s not a product you consume, but a place you co-create. These platforms foster vibrant communities, from art collectives and dance clubs to support groups and language exchange meetups, all happening in user-built virtual spaces.

Lila: So there are two parallel tracks: the polished, corporate-built experiences and the more chaotic, creative, user-driven worlds. Is there an in-between? What about the professional side?

John: Absolutely. There’s a rapidly growing enterprise segment. Companies are building virtual worlds for very specific purposes. Imagine a car manufacturer creating a virtual showroom where customers can explore and customize a new car model in 1:1 scale. Or a medical school with a virtual operating theater where students can practice complex procedures without risk. Platforms like NVIDIA’s Omniverse are designed for this kind of industrial collaboration, allowing engineers and designers from around the globe to work together on the same 3D model in a shared virtual space.

Use-Cases & Future Outlook: More Than Just Games

Lila: This is so much bigger than I thought. We’ve touched on gaming, social hangouts, and professional collaboration. What are some other use cases that are gaining traction right now?

John: The list is expanding daily. Here are some of the most impactful areas:

- Training and Simulation: This is a huge one. It’s safer and more cost-effective to train someone to operate heavy machinery, perform a delicate surgery, or handle a hazardous materials spill in a realistic virtual simulation. First responders can practice emergency scenarios in a controlled environment.

- Education: Imagine a history class where students can walk through ancient Rome, or a biology class that can shrink down to explore the inside of a human cell. Advocates argue that immersive learning can improve retention and engagement.

- Health and Wellness: VR is being used for physical therapy, making repetitive exercises more engaging. It’s also used for mental health, providing exposure therapy for phobias or PTSD in a safe, controlled setting, and for guided meditation in serene virtual landscapes.

- Remote Work: Beyond simple video calls, platforms like Immersed allow you to have multiple virtual monitors in a shared space with your colleagues, creating a sense of presence and collaboration that’s missing from a Zoom grid.


Lila: So, looking ahead, what’s the dream? Are we really heading for something like the “Oasis” from *Ready Player One*—a single, massive, interconnected virtual reality?

John: That’s the long-term vision for many, but we’re still in the very early days. The “Oasis” implies interoperability, meaning the ability to take your avatar, your digital items, and your identity from one virtual world to another seamlessly. Right now, the Metaverse is more like the early internet: a collection of walled gardens. Your Fortnite skin can’t be used in Rec Room, and your VRChat world isn’t accessible from a corporate platform. The future potential lies in breaking down those walls, most likely through open standards (some also point to blockchain technology), to create a truly persistent and unified virtual layer on top of our reality.

Competitor Comparison: The Titans of XR

Lila: Who are the main players pushing this future forward? It sounds like a battle of tech giants.

John: It is. In the hardware space, the competition is fierce. You have:

- Meta (formerly Facebook): They are all-in, spending billions per year through their Reality Labs division. Their strategy is to dominate the consumer market with accessible, affordable standalone headsets like the Quest series. Their goal is to build the user base first.

- Apple: Apple entered the ring with the Vision Pro, a very high-end “spatial computer.” Their strategy is different. They’re targeting professionals, developers, and early adopters first, focusing on creating a premium, tightly integrated ecosystem that blends AR and VR. They aren’t trying to sell to everyone, at least not yet.

- Sony: Their focus is purely on gaming. The PSVR2 is an accessory for the PS5, designed to deliver high-end gaming experiences to their existing massive user base. They’re not trying to build a general-purpose Metaverse; they’re building the future of immersive play.

- Valve: Valve, the company behind the Steam platform, caters to the PC-VR enthusiast market with hardware like the Valve Index. They are known for pushing the technological boundaries in tracking and controller technology.

- Others: Then you have companies like HTC with their Vive line, Pico (owned by ByteDance, the parent company of TikTok), which is a major competitor to Meta outside the US, and Varjo, which creates ultra-high-resolution headsets for industrial and enterprise use cases.

Lila: So everyone has a different angle. Meta wants the masses, Apple wants the premium market, Sony wants the gamers, and Valve wants the PC enthusiasts. What about on the virtual world platform side? Is the competition just as divided?

John: It is, and it’s divided along similar lines. You have Epic Games (Fortnite) and Roblox, which are game-first platforms with massive, young user bases that are evolving into social spaces. You have VRChat and Rec Room, which are social-first platforms built on user creativity. And then you have the enterprise platforms like NVIDIA Omniverse and Microsoft Mesh, which are focused on professional productivity and collaboration. Each is competing for users’ time and attention within their specific niche.

Risks & Cautions: The Not-So-Virtual Downsides

Lila: This all sounds incredibly exciting, but it can’t all be perfect. What are the major hurdles and risks we need to be aware of? My first thought is that strapping a screen to your face for hours on end can’t be great for you.

John: That’s a valid and common concern. The industry is working hard on comfort, but there are definitely challenges. Motion sickness, or “cybersickness,” can occur when there’s a disconnect between what your eyes see (movement) and what your inner ear feels (staying still). Higher refresh rates and lower latency (the delay between your movement and the image updating) help, but some people are more susceptible than others. Beyond the physical, there are significant societal and ethical questions.

Lila: Like what? Data privacy?

John: Exactly. These devices have cameras pointing out and, increasingly, cameras pointing in, tracking your eye movement and facial expressions. Eye-tracking is fantastic for creating more realistic avatars and for a technique called foveated rendering (where the headset only renders what you’re directly looking at in high detail, saving processing power), but it also means the device knows exactly what you’re looking at and for how long. That data is incredibly valuable and personal. Who owns it? How is it used? These are critical questions we’re only beginning to grapple with.
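Foveated rendering works because our visual acuity falls off quickly away from the point we’re looking at. Here is a toy sketch of the decision a renderer makes: given the gaze direction from the eye tracker and the direction of a screen region, compute the angle between them (the eccentricity) and pick a rendering resolution tier. The tier thresholds and scale factors are hypothetical illustration values, not any headset’s actual settings.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# Hypothetical tiers: (maximum eccentricity in degrees, fraction of full resolution)
TIERS = [(10.0, 1.0), (25.0, 0.5), (180.0, 0.25)]


def angle_between_deg(a: Vec3, b: Vec3) -> float:
    """Angle between two direction vectors, in degrees."""
    dot_product = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    cos_angle = max(-1.0, min(1.0, dot_product / norm))  # clamp to guard against rounding error
    return math.degrees(math.acos(cos_angle))


def resolution_scale(gaze_dir: Vec3, region_dir: Vec3) -> float:
    """Render-resolution fraction for a screen region, given where the user is looking."""
    eccentricity = angle_between_deg(gaze_dir, region_dir)
    for max_ecc, scale in TIERS:
        if eccentricity <= max_ecc:
            return scale
    return TIERS[-1][1]


if __name__ == "__main__":
    gaze = (0.0, 0.0, 1.0)  # hypothetical: user looking straight ahead
    regions = [("center", (0.0, 0.0, 1.0)), ("near periphery", (0.3, 0.0, 1.0)), ("far periphery", (1.0, 0.0, 1.0))]
    for label, direction in regions:
        print(label, resolution_scale(gaze, direction))
```

The same gaze signal that lets the renderer skip work in your periphery is exactly the data John describes as valuable and personal, which is why the privacy question is hard to separate from the engineering benefit.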

Lila: And what about the communities themselves? Online spaces can already be toxic. Does that get amplified in VR?

John: It can. Harassment and bullying can feel much more personal and invasive when they happen via an avatar standing right in front of you in a 3D space. Moderation is a monumental challenge for platform owners. Then there’s the risk of escapism and addiction, and the potential for a “digital divide” where only those who can afford the high-end hardware get to participate fully in this next evolution of the internet. These are not trivial problems.

Expert Opinions / Analyses: The View from 30,000 Feet

Lila: So, with all these pros and cons, what’s the general consensus from industry analysts? Is the Metaverse hype bubble bursting, or is it just getting started?

John: The consensus is that the initial, frothy hype of 2021 and 2022 has subsided, and we’re now entering a more realistic, and frankly, more productive phase. The initial vision of a consumer-dominated, interconnected Metaverse is still a very long way off. Many experts believe the most significant short-to-medium term growth will be in the enterprise and industrial sectors. The return on investment for training an employee in VR or using reality capture for a construction project tends to arrive sooner and is easier to measure.

Lila: So the “boring” stuff is where the real innovation is happening right now?

John: In many ways, yes. The technology has to mature and the costs have to come down before we see widespread consumer adoption on the scale of the smartphone. The focus for many experts has also shifted slightly from pure VR to Mixed Reality (MR). Apple’s entry with the Vision Pro has cemented this. The ability to seamlessly blend the real and virtual is seen as the key to making these devices useful for everyday tasks, not just for dedicated gaming or social sessions. The killer app for XR might not be a game, but a productivity tool that fundamentally changes how we interact with digital information.

Latest News & Roadmap: What’s Happening Right Now

Lila: What are some of the recent headlines that fit into this picture? I’ve noticed some company news lately.

John: Right. A perfect example of the market maturing is the news from Varjo, a company that makes high-end enterprise headsets. They recently announced they are discontinuing their older XR-3 and VR-3 models. This isn’t a sign of failure; it’s a strategic shift to focus their resources on their next-generation hardware, like the XR-4. It shows the technology is moving fast, and companies are having to be nimble to keep up.

Lila: And the LiDAR collaborations?

John: We’re seeing exciting partnerships there as well. Companies like Lumotive are developing LiDAR sensors based on “liquid crystal metasurfaces,” which are solid-state with no moving parts. That design promises sensors that are smaller, cheaper, and more reliable, which makes them well suited to consumer devices like VR headsets. As this core technology improves and becomes more affordable, its adoption is likely to grow quickly, further fueling the reality capture and MR experiences we’ve been discussing.

Lila: And of course, there are always new games and experiences on the horizon for platforms like the Quest 3 and PSVR2, which keeps the current user base engaged.

John: Constantly. The content pipeline is crucial. Without compelling games and experiences, the hardware is just an expensive paperweight. The steady stream of new software is what will continue to grow the player base and encourage people to keep buying new hardware.

FAQ: Your Questions Answered

Lila: This has been a lot to take in. Let’s do a quick-fire round. I’ll ask some basic questions that I think a beginner would have, and you can give me the concise, expert answer.

John: Sounds good. Fire away.

Lila: 1. Do I need a powerful computer to use VR?

John: Not anymore. Standalone headsets like the Meta Quest 3 have everything built-in. You only need a powerful PC for high-end PC-VR headsets, or a PlayStation 5 for the PSVR2.

Lila: 2. Will VR hurt my eyes?

John: There’s no scientific evidence that VR causes long-term eye damage. However, eye strain is possible, just like with any screen. It’s important to take regular breaks, ensure the headset’s lenses are properly aligned with your eyes (adjusting the IPD, or interpupillary distance), and stop if you feel any discomfort.

Lila: 3. What’s the difference between AR, VR, and MR?

John: Think of it as a spectrum. VR (Virtual Reality) is fully immersive and replaces your surroundings with a digital world. AR (Augmented Reality) overlays digital information onto your real-world view, usually through a phone screen or smart glasses. MR (Mixed Reality) is the most advanced, anchoring virtual objects into your real space so you can interact with them as if they were really there.

Lila: 4. Is the Metaverse just one big app or place?

John: No, not yet. Right now, the “Metaverse” is a concept describing a network of many different, disconnected virtual worlds, games, and platforms. The long-term goal is for these to become interconnected, but we’re not there yet.

Lila: 5. Is LiDAR necessary for a good VR experience?

John: It’s not necessary for a good *VR* experience, which can be entirely fantasy-based. However, it is a major advantage for a good *MR* (Mixed Reality) experience, as it allows for a much more accurate and seamless blending of the real and virtual worlds.

Related Links & Further Reading

John: For anyone who wants to dive even deeper, here are a few great resources to keep up with the industry:

- XR Today: For up-to-the-minute news on the business and technology of extended reality.

- Virtual Reality Pulse: A great content aggregator for VR professionals.

- UploadVR & Road to VR: Two of the longest-running and most respected news sites dedicated to the consumer VR industry.

- Your favorite hardware’s official blog (e.g., the Meta Quest Blog or PlayStation Blog) for software and feature updates.

Lila: Thanks, John. This has been incredibly illuminating. It feels less like a distant sci-fi concept now and more like a tangible, evolving technology with real-world applications today and massive potential for tomorrow. The gateway, the bridge, and the destinations—it all makes sense now.

John: My pleasure, Lila. It’s a complex topic, but breaking it down into its core components shows that it’s not magic; it’s the result of decades of innovation in computing, optics, and sensing technology coming together. The road ahead is long, but it’s undoubtedly one of the most exciting frontiers in tech today.

Disclaimer: The information provided in this article is for informational purposes only and does not constitute financial or investment advice. The XR market is highly volatile. Always conduct your own thorough research before making any investment decisions.