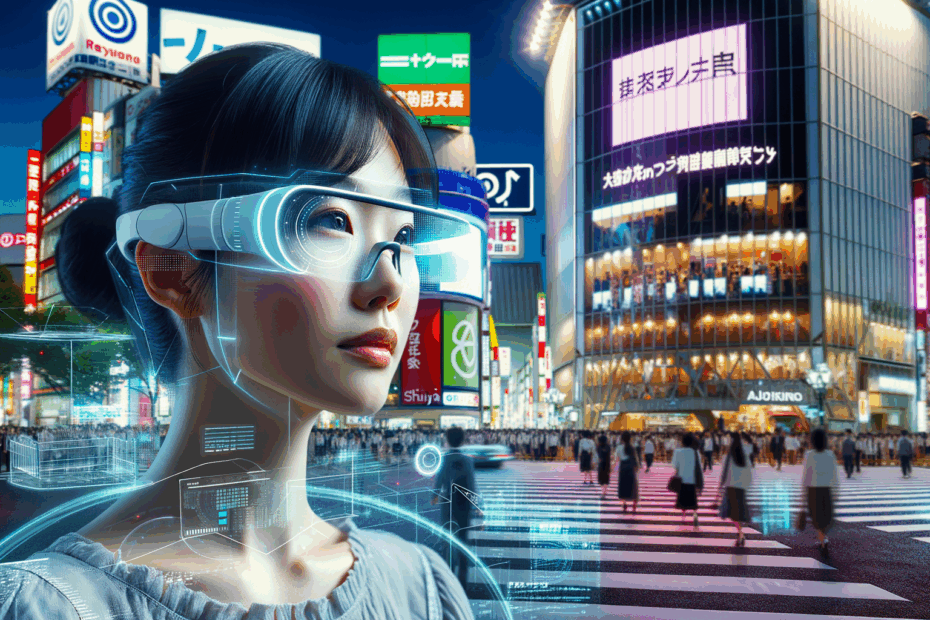

The Future Through a New Lens: Augmented Reality, Smart Glasses, and Amazon’s Alexa Fund

John: Welcome back to our Metaverse exploration, everyone. Today, we’re focusing on a particularly exciting intersection of technologies that are subtly, yet profoundly, shaping our digital and physical interactions: augmented reality (AR), the sleek new form factors of smart glasses, and the significant investments being made, particularly by giants like Amazon through its Alexa Fund. It’s a landscape buzzing with innovation, and it’s moving faster than many realize.

Lila: That sounds fascinating, John! I’ve heard bits and pieces, especially about Amazon’s interest in AR. For our readers who might be new to this, could you break down what we mean by “augmented reality” and “smart glasses”? Sometimes these terms get thrown around, and it’s easy to get lost.

John: An excellent starting point, Lila. Let’s clarify. Augmented Reality (AR) is a technology that overlays digital information – images, sounds, or other sensory stimuli – onto our real-world view. Think of it as enhancing your current perception of reality, rather than completely replacing it, which is what Virtual Reality (VR) does. So, you might see navigation arrows on the street in front of you, or information about a product when you look at it in a store, all through a device.

Lila: Okay, so it’s like adding a digital layer to the world I already see. And how do “smart glasses” fit into that picture? Are they just fancy spectacles?

John: In essence, yes, but with a lot more under the hood. Smart glasses are wearable computer-enabled eyeglasses. Early versions were a bit clunky, but they’re rapidly evolving into more stylish and functional forms. They can provide displays for AR, integrate cameras, microphones, speakers, and connect to the internet or your smartphone. The goal is to offer hands-free access to information and digital interactions. Amazon’s Echo Frames are an early example, though they focus more on audio and Alexa integration without a visual AR display. However, the industry, and indeed Amazon, is pushing towards true AR capabilities in glasses.

Basic Info: Demystifying AR, Smart Glasses, and the Alexa Fund

Lila: That makes sense. So, AR is the “what” – the digital overlay – and smart glasses are increasingly the “how” – the device we use to experience it. You mentioned the Alexa Fund. What exactly is that, and why is Amazon so invested in this particular area of tech?

John: The Alexa Fund is Amazon’s venture capital arm, specifically dedicated to investing in companies that are innovating in areas like voice technology, artificial intelligence (AI), and, crucially for our discussion, ambient computing and augmented reality. They provide funding to startups and developers to fuel the growth of voice-powered experiences and related hardware. Amazon sees a future where Alexa isn’t just in smart speakers, but integrated everywhere, providing seamless assistance. Smart glasses with AR are a natural extension of this vision.

Lila: So, they’re essentially funding the ecosystem around their core AI, Alexa? That’s a smart move. It’s not just about selling Echo Dots anymore.

John: Precisely. It’s about creating a pervasive, helpful AI presence. Imagine smart glasses that not only show you information but also allow you to interact with Alexa hands-free, in a much more contextual way. For instance, you could be looking at a landmark, and Alexa, through your AR glasses, could provide information about it, or translate a sign in real-time. This is where their interest in companies developing advanced AR smart glasses becomes very clear.

Lila: That paints a clearer picture. It’s less about a single gadget and more about an interconnected experience. Are these AR smart glasses widely available now, or are we still in the early adopter phase?

John: We’re in a fascinating transitional phase. There are some AR smart glasses on the market, primarily aimed at enterprise or specific niche uses. For example, Vuzix makes AR glasses for industrial applications such as logistics, manufacturing, and field service. Meta has its Ray-Ban Stories, which are more “smart” than full AR but inch closer. Google has been experimenting for years, from Google Glass to newer prototypes. Apple launched its Vision Pro mixed-reality headset in early 2024 and is widely rumored to be working toward lighter AR glasses. The truly mainstream, consumer-friendly AR smart glasses that seamlessly blend style and powerful AR are still on the horizon, but development is accelerating rapidly, partly thanks to investments like those from the Alexa Fund.

Supply Details: Who’s Building the AR Future, and How is Alexa Fund Fueling It?

Lila: You mentioned Amazon is investing in other companies through the Alexa Fund. Can you give us some examples, especially in the AR smart glasses space? I saw some headlines about a company called IXI.

John: Yes, IXI is a perfect example and very topical. IXI, a Helsinki-based startup, has been a significant recipient of funding that includes participation from the Alexa Fund. They recently raised a substantial amount, around $36.5 million, with the Alexa Fund being a notable investor. This wasn’t their first funding round involving Amazon’s venture arm either. IXI is particularly interesting because they are working on what they describe as “autofocus eyewear” – smart glasses that can dynamically adjust their focus, potentially revolutionizing vision correction and, by extension, how AR displays are integrated for clarity.
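IXI’s actual optical design isn’t described here, so the following is only a textbook sketch of why dynamic focus helps. In the thin-lens approximation, focusing on an object d metres away takes 1/d dioptres of accommodation. A wearer whose eyes can supply only A dioptres (a reserve that shrinks with age) needs a lens that adds the shortfall. The function names, and the idea of driving the lens from an estimated viewing distance, are illustrative assumptions, not IXI’s method.

```python
def required_accommodation(distance_m: float) -> float:
    """Dioptres needed to focus at distance_m (thin-lens approximation: 1/d)."""
    if distance_m <= 0:
        raise ValueError("distance must be positive")
    return 1.0 / distance_m


def lens_add_power(distance_m: float, accommodation_amplitude_d: float) -> float:
    """Extra positive power (dioptres) a tunable lens would have to supply.

    Hypothetical helper: ignores depth of field, the wearer's distance
    prescription, and the comfort reserve a real fitting would include.
    """
    shortfall = required_accommodation(distance_m) - accommodation_amplitude_d
    return max(0.0, shortfall)


if __name__ == "__main__":
    # Illustrative wearer with 1.0 D of remaining accommodation.
    for d in (4.0, 1.0, 0.4):
        print(f"{d} m -> add {lens_add_power(d, 1.0):.2f} D")
```

A tunable lens would recompute this whenever the estimated viewing distance changes, which is the kind of adjustment “autofocus eyewear” implies; how the glasses estimate that distance is outside this sketch.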

Lila: Autofocus eyewear? That sounds like a game-changer, not just for AR but for anyone who wears corrective lenses! So, Amazon, through the Alexa Fund, is backing this fundamental optical technology?

John: Exactly. It shows a deep strategic interest. If AR glasses are to become mainstream, they need to be comfortable and effective for a wide range of users, including those with vision impairments. Solving the optics is a huge hurdle. By investing in companies like IXI, Amazon is helping to build the foundational technology that could underpin future generations of AR smart glasses, potentially even their own or those deeply integrated with the Alexa ecosystem.

Lila: So, it’s not just about the software and AI, but also the core hardware components. Are there other notable players receiving this kind of backing or developing similar tech that Amazon might be interested in?

John: While IXI is a prominent recent example linked to the Alexa Fund, the AR space is quite active. Companies like Vuzix, as I mentioned, are established in the enterprise AR market and are constantly innovating. They’ve recently focused on expanding their capabilities, even setting up a new facility in Silicon Valley to bolster innovation. Then you have component manufacturers working on micro-displays, waveguides (the optics that guide light from a tiny projector to your eye), sensors, and low-power processors. The Alexa Fund’s strategy seems to be to identify and support key enablers across this spectrum, from core technology like IXI’s autofocus system to potentially software and application developers down the line.

Lila: It sounds like a very strategic, long-term play. They’re not just waiting for the market to mature; they’re actively shaping it. Does Amazon develop its own AR glasses too, or is it purely through these investments?

John: Amazon has Lab126, its hardware R&D division behind Kindle, Echo, and Fire devices. While Amazon hasn’t yet released glasses with a full AR display to the mass market (its Echo Frames are audio-focused), it’s widely believed to be working on more advanced AR hardware. Recent reports suggest Amazon is exploring AR glasses to assist its delivery drivers with routes and on-foot navigation. This is a very practical, internal use case that could serve as both a testing ground and a driver for its AR development. The Alexa Fund investments can be seen as complementary to these internal efforts, giving Amazon access to cutting-edge external innovation and potential acquisition targets.

Technical Mechanism: How Do AR Smart Glasses and Alexa Work Together?

Lila: Okay, let’s get a bit more into the weeds. How do these AR smart glasses actually work? What’s the tech inside that makes these digital overlays possible, and how would something like Alexa integrate seamlessly?

John: It’s a complex interplay of several key technologies. At a high level, AR smart glasses typically include:

- Displays: These are crucial. They project the digital information into the wearer’s field of view. Technologies vary, from waveguide displays that use a tiny projector and optical elements to direct light to the eye, to simpler birdbath optics or even retinal projection. The goal is a bright, clear image that appears naturally integrated with the real world.

- Sensors: A suite of sensors is needed to understand the environment and the user’s orientation. This includes cameras (for seeing the world and sometimes for eye-tracking), accelerometers and gyroscopes (for motion and head tracking), and potentially depth sensors (like LiDAR, for 3D mapping of the surroundings).

- Processors: All this sensor data and the AR rendering require significant computational power. This can be on-board the glasses (requiring powerful but very low-power chips) or offloaded to a connected smartphone or even the cloud, though on-device processing is preferred for lower latency (delay).

- Connectivity: Wi-Fi and Bluetooth are standard for connecting to the internet, smartphones, or other devices. 5G could play a bigger role in the future for high-bandwidth, low-latency cloud AR experiences.

- Input/Output: This includes microphones for voice commands (crucial for Alexa), small speakers or bone conduction transducers for audio, and sometimes touchpads or even gesture recognition.
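The accelerometers and gyroscopes in that list are usually fused, because a gyroscope drifts over time while an accelerometer is noisy but anchored to gravity. One simple, common technique is a complementary filter; the sketch below estimates head pitch that way. The blend factor `alpha`, the sample rate, the axis convention (x pointing forward), and the function names are illustrative assumptions. Production headsets typically use more sophisticated estimators (for example Kalman-style filters) together with camera-based tracking.

```python
import math
from typing import Iterable, List, Optional, Tuple


def accel_pitch(ax: float, ay: float, az: float) -> float:
    """Pitch (radians) implied by the gravity vector; valid when the head is roughly still."""
    return math.atan2(-ax, math.sqrt(ay * ay + az * az))


def complementary_pitch(
    samples: Iterable[Tuple[float, float, float, float]],
    dt: float,
    alpha: float = 0.98,
) -> List[float]:
    """Fuse (gyro_pitch_rate, ax, ay, az) samples into one pitch estimate per step.

    The integrated gyro rate is responsive but drifts, so it gets weight alpha;
    the accelerometer angle is noisy but drift-free, so it gets 1 - alpha.
    """
    estimates: List[float] = []
    pitch: Optional[float] = None
    for rate, ax, ay, az in samples:
        measured = accel_pitch(ax, ay, az)
        if pitch is None:
            pitch = measured  # initialise from gravity on the first sample
        else:
            pitch = alpha * (pitch + rate * dt) + (1.0 - alpha) * measured
        estimates.append(pitch)
    return estimates


if __name__ == "__main__":
    # Head held still, gyro reporting a constant 0.01 rad/s bias, gravity on +z.
    still = [(0.01, 0.0, 0.0, 9.81)] * 1000
    est = complementary_pitch(still, dt=0.01)
    print(f"filtered pitch after 10 s: {est[-1]:.4f} rad")
    print(f"gyro-only drift after 10 s: {0.01 * 0.01 * 1000:.4f} rad")
```

With a stationary head, integrating the biased gyro alone drifts linearly, whereas the filtered estimate settles at a small bounded offset of roughly alpha·bias·dt / (1 − alpha).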

Lila: That’s a lot packed into something that’s supposed to look like regular glasses! So, if I’m wearing a pair of these hypothetical Alexa-enabled AR glasses, and I ask, “Alexa, what’s that building?”, what happens technically?

John: Great question. Here’s a simplified breakdown:

- Voice Capture: The microphones in the smart glasses pick up your command, “Alexa, what’s that building?”

- Wake Word & Processing: On-device processing (or sometimes cloud-assisted) recognizes the “Alexa” wake word and processes your query. The audio data of your question is typically sent to Amazon’s cloud servers for Natural Language Understanding (NLU).

- Contextual Awareness (The AR Magic): This is where AR adds significant value. The glasses’ camera and sensors are constantly analyzing your field of view. The system would identify the building you’re likely looking at based on your head orientation, gaze (if eye-tracking is available), and possibly even image recognition of the building itself. GPS data would also help narrow down the location.

- Information Retrieval: Alexa, now understanding both your question and the contextual information (the specific building), queries relevant databases (e.g., mapping services, local business information, historical archives).

- AR Display & Audio Feedback: The retrieved information is then presented back to you. Alexa might audibly tell you about the building through the glasses’ speakers. Simultaneously, the AR display could overlay text with the building’s name, historical facts, or even highlight architectural features directly onto your view of the building.

This entire process needs to happen in near real-time to feel natural and useful.
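The “Contextual Awareness” step can be approximated, before any image recognition, with plain geometry: compute the compass bearing from the wearer’s GPS fix to each nearby point of interest and pick the one closest to the direction the head is facing, within the display’s horizontal field of view. The sketch below assumes a compass heading from the glasses’ sensors, a small hypothetical list of candidate buildings, and a 30° field of view. A real system would combine this with camera-based recognition and map data as described above.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence


@dataclass
class PointOfInterest:
    name: str
    lat: float  # degrees
    lon: float  # degrees


def initial_bearing(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle initial bearing from point 1 to point 2, in degrees [0, 360)."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dlon = math.radians(lon2 - lon1)
    x = math.sin(dlon) * math.cos(phi2)
    y = math.cos(phi1) * math.sin(phi2) - math.sin(phi1) * math.cos(phi2) * math.cos(dlon)
    return (math.degrees(math.atan2(x, y)) + 360.0) % 360.0


def angular_difference(a: float, b: float) -> float:
    """Smallest absolute difference between two compass angles, in degrees."""
    d = abs(a - b) % 360.0
    return min(d, 360.0 - d)


def likely_target(
    user_lat: float,
    user_lon: float,
    head_heading_deg: float,
    candidates: Sequence[PointOfInterest],
    fov_deg: float = 30.0,
) -> Optional[PointOfInterest]:
    """Return the candidate nearest the facing direction, or None if none is in view."""
    best, best_err = None, fov_deg / 2.0
    for poi in candidates:
        bearing = initial_bearing(user_lat, user_lon, poi.lat, poi.lon)
        err = angular_difference(bearing, head_heading_deg)
        if err <= best_err:
            best, best_err = poi, err
    return best


if __name__ == "__main__":
    # Hypothetical buildings roughly 110 m north and east of the wearer.
    north = PointOfInterest("North Hall", 0.001, 0.0)
    east = PointOfInterest("East Tower", 0.0, 0.001)
    for heading in (0.0, 90.0, 180.0):
        hit = likely_target(0.0, 0.0, heading, [north, east])
        print(heading, hit.name if hit else "nothing in view")
```

Restricting matches to half the field of view on either side keeps the assistant from answering about a building the wearer cannot actually see; eye-tracking, where available, could narrow that cone further.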

Lila: Wow, that “Contextual Awareness” part is key. It’s not just Alexa answering a question; it’s Alexa *seeing* what I see and understanding my environment. That sounds incredibly powerful, but also computationally intensive.

John: It is. And that’s why advancements in low-power processing, efficient AI algorithms (especially for computer vision and SLAM – Simultaneous Localization and Mapping, which helps the glasses understand where they are in 3D space), and display technology are so critical. Companies like IXI focusing on autofocus also contribute, because a clear, adaptable display is fundamental for presenting that AR information effectively, regardless of the user’s eyesight.
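One way to see why latency matters for the overlay itself, and why on-device processing is preferred: if the head turns at angular velocity ω and the total motion-to-photon delay is Δt, a world-locked label is drawn roughly ω·Δt degrees away from where it belongs. The sketch below simply applies that estimate. The head-turn speed and latency values are illustrative inputs, not measurements of any device, and the sketch ignores pose prediction, which real systems use to hide part of the delay.

```python
import math


def misregistration_deg(head_rate_deg_s: float, latency_ms: float) -> float:
    """Angular error of a world-locked overlay: omega * delta_t (no pose prediction)."""
    return head_rate_deg_s * latency_ms / 1000.0


def offset_at_distance_m(error_deg: float, distance_m: float) -> float:
    """Lateral offset that an angular error produces on an object distance_m away."""
    return distance_m * math.tan(math.radians(error_deg))


if __name__ == "__main__":
    # Illustrative: a 100 deg/s head turn, comparing two latency budgets.
    for latency in (20.0, 100.0):
        err = misregistration_deg(100.0, latency)
        print(f"{latency:.0f} ms -> {err:.1f} deg, "
              f"{offset_at_distance_m(err, 20.0):.2f} m off at 20 m")
```

Because the error grows linearly with delay, every extra network round trip in the rendering loop shows up directly as labels sliding off the building they describe.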

Team & Community: The People and Ecosystems Behind AR Smart Glasses

Lila: It’s clear there’s a lot of sophisticated R&D involved. What can you tell us about the teams and communities driving this forward? Are these secretive internal labs, or is there a broader ecosystem emerging, especially with initiatives like the Alexa Fund?

John: It’s a mix, Lila. You have the large tech companies like Amazon (Lab126), Meta (Reality Labs), Google (various AR projects), and Apple (their secretive development groups), which have significant internal teams composed of engineers, designers, researchers, and product managers. These are often quite well-funded but can also be, as you say, somewhat secretive about their long-term roadmaps.

Lila: So, the big players are definitely in the game. But what about the startups, like IXI? What kind of teams do they have?

John: Startups like IXI often bring together highly specialized talent. IXI, for instance, based in Helsinki, benefits from Finland’s strong engineering and mobile technology heritage. Their team likely includes experts in optics, materials science, microelectronics, and software development specific to vision technologies. The fact that they’ve attracted investment from the Alexa Fund and other prominent tech investors suggests a high caliber of expertise and a compelling technological vision.

Lila: And how does something like the Alexa Fund foster a community? Is it just about the money, or is there more to it?

John: The Alexa Fund, and similar venture arms, do more than just write checks. They often provide access to resources, mentorship, and technical expertise from within Amazon. For companies developing Alexa-integrated hardware or skills, this can be invaluable. It can also create a network effect, where portfolio companies might collaborate or learn from each other. Amazon also runs programs and provides SDKs (Software Development Kits) to encourage third-party developers to build Alexa skills and integrate Alexa into their products. This helps create a broader developer community around the Alexa ecosystem, which indirectly supports the hardware like smart glasses by ensuring there are useful applications and services available for them.

Lila: So, it’s about building an ecosystem, not just a product. Are there open-source communities contributing to AR and smart glasses development as well?

John: Yes, though perhaps not as visibly for the complete end-product hardware. However, many underlying technologies benefit from open-source contributions. For example, there are open-source computer vision libraries (like OpenCV), 3D graphics engines, and AI frameworks that developers in the AR space utilize. Some companies might also open-source parts of their software platforms to encourage wider adoption and community development. It’s a hybrid model: the core hardware and some proprietary software are often kept close, but the broader software and application layer can be more open.

Lila: That makes sense. A strong developer community would be crucial for making smart glasses truly useful. If there are no apps or experiences, the hardware won’t take off, no matter how good it is.

John: Exactly. It’s the classic chicken-and-egg scenario. You need compelling hardware to attract developers, and you need compelling applications to attract users to the hardware. Initiatives like the Alexa Fund aim to help bootstrap both sides of that equation, especially for technologies that align with Amazon’s strategic interests in voice, AI, and ambient computing.

Use-Cases & Future Outlook: Beyond Navigation and Notifications

Lila: We’ve touched on some use cases, like Amazon using AR glasses for delivery drivers. What are some other current and, more excitingly, future applications for AR smart glasses, especially with Alexa integration?

John: The potential applications are vast and span across consumer, enterprise, and specialized professional domains. Let’s break some down:

- Enterprise & Industrial:

- Logistics and Warehousing: As seen with Amazon’s potential use, AR can provide hands-free navigation, order picking instructions, and inventory management.

- Manufacturing and Assembly: Overlaying instructions, schematics, or quality control checklists directly onto a worker’s view of a component.

- Field Service and Maintenance: Remote expert assistance, where a technician can share their view with an off-site expert who can then guide them with AR annotations.

- Healthcare: Surgeons could see vital patient data overlaid in their field of view, or even 3D anatomical models during procedures. Training and medical education also benefit.

- Consumer Applications:

- Navigation: Beyond car GPS, think real-time walking directions overlaid on streets, or finding your way through a crowded airport.

- Information Overlay: Looking at a restaurant and seeing reviews, a menu, or booking availability. Translating foreign language signs in real-time.

- Communication: Enhanced video calls where it feels more like the person is in the room with you, or sharing your POV with AR annotations.

- Gaming and Entertainment: Immersive AR games that interact with your real-world environment. Imagine Pokémon Go, but far more integrated.

- Shopping: Visualizing furniture in your home before buying it, or “trying on” clothes virtually.

- Accessibility: For individuals with hearing impairments, Vuzix is supplying custom AR glasses for captioning solutions. IXI’s autofocus could help those with vision issues see digital overlays clearly.

Lila: Those enterprise uses sound incredibly practical and can offer immediate ROI. And the consumer ones… real-time translation through my glasses? That’s like something out of science fiction! How does Alexa enhance these?

John: Alexa integration makes these experiences hands-free and more intuitive. Instead of fumbling with a phone or a tiny touchpad on the glasses, you can just ask.

- “Alexa, show me the assembly instructions for this part.”

- “Alexa, call Sarah and share my view.”

- “Alexa, what’s the rating for this restaurant?”

- “Alexa, translate this sign.”

- “Alexa, guide me to the nearest coffee shop.”

Voice becomes the primary interface, which is crucial for a device that’s meant to be worn and used while you’re engaged with the real world.

Lila: The future outlook seems incredibly bright then. Are we talking about a 5-year, 10-year horizon for widespread adoption of these advanced AR glasses?

John: It’s always tricky to put precise timelines on technological adoption. However, the pace of development is accelerating. We’re seeing more investment, more sophisticated prototypes, and clearer use cases. I’d say within the next 3-5 years, we’ll see significantly more capable and consumer-friendly AR smart glasses emerge, especially from the major tech players. Widespread, ubiquitous adoption, where they’re as common as smartphones, is likely further out, perhaps in the 7-10 year range. But key vertical markets, like enterprise and specialized professional fields, will see significant adoption much sooner. The investments from entities like the Alexa Fund are definitely helping to shorten these timelines by fueling the necessary innovation in both hardware and software.

Lila: And I suppose as the technology improves, the glasses will become less obtrusive, more stylish, and have longer battery life – all key factors for mass adoption.

John: Absolutely. Those are critical engineering challenges: miniaturization, power efficiency, display brightness and field of view, and social acceptability of the design. Reports on IXI’s focus on “eyewear” and “autofocus glasses” highlight this drive towards making the technology not just functional but also comfortable and adaptable to individual user needs, which is paramount for everyday use.

Competitor Comparison: How Does Amazon’s Approach Stack Up?

Lila: We’ve talked a lot about Amazon and the Alexa Fund. How does their strategy in the AR smart glasses space compare to what other big tech companies like Meta, Google, or Apple are doing?

John: That’s a great question, as each major player seems to have a slightly different angle, though with a shared goal of making AR a significant computing platform.

- Amazon: Their approach, heavily emphasized by the Alexa Fund’s activities and their internal developments for logistics, seems very pragmatic and ecosystem-focused. They are building on the strength of Alexa, aiming for ambient intelligence where AR glasses become another key touchpoint for their AI. The investment in foundational tech like IXI’s autofocus glasses suggests a long-term vision for high-quality, accessible AR. Their initial forays (Echo Frames) were audio-first, indicating a gradual path to visual AR, possibly prioritizing utility and voice interaction.

- Meta (Facebook): Meta is all-in on the Metaverse, and they see AR glasses as a crucial component of that vision, alongside VR. Their Ray-Ban Stories are a step towards normalizing face-worn tech, though they lack true AR displays. Their high-end Project Cambria headset (released as the Meta Quest Pro) and future true AR glasses (like the “Orion” prototype Meta showed in 2024) aim for much deeper immersion and social presence. Their strategy is heavily tied to building out their vision of the Metaverse as a social and commercial platform.

- Google: Google has a long history with AR, starting with Google Glass. While Glass faced initial consumer backlash, it found a niche in enterprise. They’ve continued R&D, with projects like Google Lens (AR on smartphones) and more recent AR glasses prototypes. Recent reports mention “Google’s unnamed XR glasses” with a display and AI connectivity, suggesting they’re still actively pursuing this. Their strength lies in search, mapping, AI, and Android, all of which are natural fits for AR. They seem to be taking a more cautious, research-driven approach to consumer AR glasses after the initial Glass experience.

- Apple: Apple is the quiet giant in this space. They entered with Vision Pro, a high-end mixed-reality (MR) headset released in early 2024, and are widely rumored to be developing sleeker AR glasses. Apple’s strategy typically involves entering a market when they feel they can deliver a highly polished, integrated hardware and software experience. Their strengths in chip design (M-series, A-series chips), ecosystem control (iOS, App Store), and design prowess make them a formidable competitor. They’ve also been steadily building AR capabilities into iOS with ARKit.

- Microsoft: With HoloLens, Microsoft has been a leader in enterprise AR for years. Their focus has been primarily on productivity, industrial, and military applications. While HoloLens is a powerful but bulky headset, the learnings are invaluable for future, more compact devices.

- Vuzix: As recent coverage highlights, Vuzix is a more specialized player, focusing on enterprise AR smart glasses and waveguides. They are a good example of a company building specific solutions for industry, often partnering with others or supplying core technology.

Lila: So, Amazon’s angle with Alexa and potentially leveraging their massive logistics and e-commerce operations seems quite distinct. They’re not necessarily trying to build the most immersive social Metaverse like Meta, or a pure productivity tool like Microsoft’s current HoloLens focus.

John: Precisely. Amazon’s approach seems to be about enhancing everyday interactions and tasks through ambient, voice-driven AI, with AR as a visual component. Their investment in IXI’s autofocusing eyewear, reported by multiple outlets, reinforces this practical, user-centric approach: making AR accessible and visually comfortable is a priority. This could give them an edge in everyday usability if they can successfully integrate Alexa in a truly helpful, non-intrusive way through well-designed smart glasses.

Lila: It sounds like the “killer app” for Amazon’s AR glasses might be Alexa itself, supercharged with visual context. Whereas for Meta, it might be social presence, and for Apple, perhaps a seamless extension of their existing ecosystem.

John: That’s a very astute way to put it, Lila. The core strengths and strategic goals of each company are shaping their approach to this next wave of personal computing.

Risks & Cautions: The Hurdles on the Road to AR Ubiquity

Lila: This all sounds incredibly promising, John, but what are the potential downsides or challenges? It can’t all be smooth sailing. I can already think of privacy concerns with glasses that are always “seeing.”

John: You’ve hit on a major one right away, Lila. The risks and cautions are significant and need careful consideration by developers, manufacturers, and society as a whole.

- Privacy: This is paramount. Smart glasses with always-on cameras and microphones raise legitimate concerns about surveillance, data collection, and unauthorized recording. How will user data be protected? What about the privacy of people around the wearer? Clear regulations, transparent policies, and strong security measures are essential. Meta’s renewed work on facial recognition, as one recent report mentions, will undoubtedly reignite these debates.

- Data Security: These devices will handle a vast amount of personal and environmental data. Protecting this data from breaches and misuse is a massive challenge.

- Social Acceptance: The “glasshole” phenomenon with early Google Glass showed that public perception and social etiquette are major hurdles. Devices need to be discreet, and there need to be clear indicators if they are recording. Style and aesthetics also play a huge role.

- Technological Hurdles:

- Battery Life: Packing all that tech into a small form factor and having it last all day is incredibly difficult.

- Field of View (FoV): Many current AR displays offer a limited FoV, feeling like a small screen floating in front of you rather than a truly immersive overlay.

- Brightness and Outdoor Readability: Displays need to be bright enough to be seen clearly in various lighting conditions, especially outdoors.

- Heat Dissipation: Powerful processors generate heat, which can be uncomfortable in a wearable device on your head.

- User Interface (UI) / User Experience (UX): Designing intuitive and non-distracting interfaces for AR is still an evolving art. Voice control like Alexa helps, but visual UIs need to be carefully crafted.

- Cost: Early advanced AR devices are expensive. Bringing the cost down to mass-market levels will take time and economies of scale.

- Content Ecosystem: As we discussed, without compelling apps and experiences, the hardware won’t thrive. Building this ecosystem takes time and investment.

- Health and Safety: Concerns about eye strain, distraction while performing tasks like driving or operating machinery, and the long-term effects of wearing such devices need to be addressed.

- Digital Divide: Ensuring equitable access to these technologies so they don’t exacerbate existing inequalities is another societal consideration.
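The field-of-view hurdle in that list can be made concrete with one formula: a flat virtual image of width w at apparent distance d subtends 2·atan(w / 2d). The sketch below converts a display’s horizontal FoV into the width of an equivalent “virtual screen” at a chosen distance, and back. The 30° and 2 m values in the example are assumptions for illustration, not specifications of any product named here.

```python
import math


def fov_deg(width_m: float, distance_m: float) -> float:
    """Horizontal angle subtended by a flat image width_m wide at distance_m."""
    return math.degrees(2.0 * math.atan(width_m / (2.0 * distance_m)))


def apparent_width_m(fov: float, distance_m: float) -> float:
    """Width of the flat virtual screen that a given FoV corresponds to at distance_m."""
    return 2.0 * distance_m * math.tan(math.radians(fov) / 2.0)


if __name__ == "__main__":
    w = apparent_width_m(30.0, 2.0)
    print(f"30 deg FoV ~ a {w:.2f} m wide screen seen from 2 m")
    print(f"round trip back to angle: {fov_deg(w, 2.0):.1f} deg")
```

Since human horizontal vision spans well over 180°, a display covering a few tens of degrees reads as a window floating in front of you rather than an overlay on everything you see.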

Lila: That’s a pretty daunting list. The privacy aspect, in particular, seems like a make-or-break issue for widespread trust and adoption. How are companies like Amazon addressing these, especially with Alexa being central to their strategy?

John: Companies are increasingly aware that they need to address these proactively. For Alexa, Amazon has implemented features like microphone mute buttons on Echo devices and options to review and delete voice recordings. Similar principles would need to apply, and be even more robust, for AR glasses. This could include clear visual indicators when cameras are active, on-device processing where possible to limit data sent to the cloud, and strong data encryption. The focus on “glanceable info” and voice control, as seen in some early smart glasses concepts (such as camera-free Alexa glasses that have circulated on social media), might be an interim step to build comfort before full AR vision features become commonplace.
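The safeguards John lists (mute controls, on-device processing, visible indicators) can be illustrated with a toy gate: audio sits in a short on-device ring buffer and is forwarded only when the device is unmuted and a wake word has been detected, with an indicator flag raised while audio is leaving the device. This is a hypothetical design sketch, not Amazon’s implementation. Wake-word detection is stubbed as a string match on pre-transcribed frames, and `AudioGate`, `uplink`, and the frame format are invented for illustration.

```python
from collections import deque
from typing import Deque, List


class AudioGate:
    """Hypothetical on-device gate deciding which audio frames may leave the glasses."""

    def __init__(self, wake_word: str = "alexa", buffer_frames: int = 5,
                 utterance_frames: int = 3) -> None:
        self.wake_word = wake_word
        self.muted = False
        self.indicator_on = False  # e.g. an LED shown while audio is streamed
        self._local: Deque[str] = deque(maxlen=buffer_frames)  # never uploaded
        self._remaining = 0  # frames still to forward after a wake word
        self._utterance_frames = utterance_frames
        self.uplink: List[str] = []  # stand-in for what would be sent to the cloud

    def _is_wake_word(self, frame: str) -> bool:
        # Stub: a real device would run a small on-device keyword-spotting model.
        return frame.strip().lower() == self.wake_word

    def push(self, frame: str) -> None:
        if self.muted:
            # Muted: drop everything, including any pending request.
            self._local.clear()
            self._remaining = 0
            self.indicator_on = False
            return
        if self._remaining > 0:
            self.uplink.append(frame)
            self._remaining -= 1
        elif self._is_wake_word(frame):
            self._remaining = self._utterance_frames
        else:
            self._local.append(frame)  # overwritten as the ring buffer rolls over
        self.indicator_on = self._remaining > 0


if __name__ == "__main__":
    gate = AudioGate()
    for f in ["chatter", "Alexa", "what's", "that", "building", "private talk"]:
        gate.push(f)
    print(gate.uplink)
```

Forwarding a fixed number of frames is a simplification; real assistants decide where a request ends using voice-activity detection.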

Lila: So, an incremental approach might be key to building public trust. It’s not just about technological feasibility but also societal readiness.

John: Precisely. It’s a socio-technical challenge. The companies that navigate these ethical and practical considerations most effectively will likely be the ones that succeed in the long run. The Alexa Fund investing in a company like IXI, which is working on fundamental vision-correcting eyewear, also hints at a focus on user well-being and accessibility, which is a positive sign.

Expert Opinions / Analyses: What the Pundits Are Saying

Lila: John, with your experience, you’ve seen tech trends come and go. What’s the general sentiment among industry analysts and experts regarding AR smart glasses, particularly with Amazon’s push via the Alexa Fund?

John: The general consensus among analysts is one of cautious optimism, with a strong belief in the long-term transformative potential of AR. Most see AR as the next major computing platform after mobile. Recent coverage reflects this: outlets like XR Today, Virtual Reality Pulse, and others are actively covering Amazon’s investments and the broader AR smart glasses landscape, signaling its perceived importance.

Experts point to several key indicators:

- Sustained Investment: The fact that major players like Amazon (through the Alexa Fund), Meta, Google, and Apple are pouring billions into R&D and acquisitions is a strong sign of commitment. The $36.5 million funding for IXI, with Amazon’s participation, is a case in point – it’s serious money for a specialized technology.

- Enterprise Adoption as a Proving Ground: The success of AR in enterprise settings (logistics, manufacturing, healthcare) is demonstrating tangible value and helping to mature the technology and supply chains. This often paves the way for consumer adoption. Amazon’s reported use of AR glasses for delivery drivers is a prime example of this internal validation.

- Convergence of Enabling Technologies: Advancements in AI (especially computer vision and natural language processing like Alexa), 5G connectivity, miniaturized sensors, and display technologies are all reaching a point where compelling AR experiences are becoming feasible in smaller form factors.

- Focus on User Experience: There’s a growing understanding that AR glasses need to be more than just tech showcases. They need to be comfortable, stylish, intuitive, and solve real problems or offer significant benefits. IXI’s emphasis on “autofocus glasses”, and the push for stylish designs in general, reflect this.

Lila: So, the experts are generally bullish, but what are their main reservations or areas of focus when they analyze this space?

John: Their reservations often mirror the risks we just discussed: privacy, social acceptance, and the sheer technical challenge of creating a device that is powerful, affordable, and wearable all day. Analysts are closely watching for:

- The “Killer App” or Use Case: While enterprise has clear ROIs, the consumer “killer app” for AR glasses is still being sought. Is it enhanced navigation, ubiquitous information access via Alexa, immersive gaming, or something else entirely?

- Platform Wars: Will AR be dominated by a few large ecosystems, similar to smartphones (iOS vs. Android)? How open or closed will these platforms be?

- Pace of Innovation vs. Hype: There’s always a risk of hype outpacing reality. Analysts look for genuine technological breakthroughs and sustainable business models rather than just buzz.

The commentary around Amazon’s Alexa Fund investments tends to be positive, as it signals a commitment to fostering an ecosystem and tackling fundamental challenges, like IXI’s work on vision correction for AR. It’s seen as a pragmatic, long-term strategy.

Lila: And what’s your personal take, John, as a veteran tech journalist? Do you think this time, AR glasses will finally break into the mainstream?

John: I believe they will, Lila, but it will be an evolution, not an overnight revolution. We’ve learned a lot from past attempts. The current focus on practical utility, voice interfaces like Alexa, and solving core wearability issues (like IXI’s autofocus) suggests a more mature approach. The integration with existing, successful ecosystems, like Alexa for Amazon, provides a strong foundation. It won’t be a single device launch that changes everything, but rather a gradual infusion of AR capabilities into our digital lives, with smart glasses becoming an increasingly important interface. As one headline put it, Amazon “continues” its journey, and that journey is one of persistent, strategic building. It’s a marathon, not a sprint.

Latest News & Roadmap: Amazon’s AR Journey and the Alexa Fund’s Role

Lila: Let’s bring it right up to the present. Based on the recent news coverage, what’s the latest on Amazon’s AR smart glasses journey and the roadmap, particularly concerning the Alexa Fund?

John: The latest news, as highlighted in several reports from late April and early May 2025, really underscores Amazon’s continued and deepening commitment to AR, largely propelled by the Alexa Fund.

Key recent developments include:

- Significant Funding for IXI: Multiple sources (XRToday, EWIntelligence, Virtual Reality Pulse) confirm that Amazon’s Alexa Fund participated in a $36.5 million funding round for IXI, the Helsinki-based developer of AR smart glasses, specifically focusing on autofocus technology. This is a major signal of Amazon’s interest in solving fundamental optical challenges for AR eyewear. The capital is intended to help IXI launch its autofocus eyewear and potentially target distribution through eye care professional (ECP) channels.

- Amazon’s Internal AR Applications: XRToday explicitly mentions Amazon’s work on AR glasses to assist their delivery drivers with routes and on-foot navigation to customer delivery points. This points to a practical, internal application that can drive development and provide real-world testing. “Amazon isn’t just delivering packages — it’s delivering the future of AR,” as one tweet aptly put it.

- Alexa Fund’s Broader Strategy: The Alexa Fund’s involvement is consistently framed as strategic, aiming to foster the next generation of AR smart glasses and drive innovation in the broader XR (Extended Reality) landscape. This isn’t just about one company; it’s about building an ecosystem.

- Vuzix Expansion: While not directly an Amazon initiative, XRToday also reported on Vuzix, another player in the AR smart glasses market, bolstering its innovation capabilities with a new Silicon Valley facility. This indicates broader momentum in the AR hardware space.

Lila: So, the roadmap seems to be:

- Fund and support foundational technologies (like IXI’s autofocus).

- Develop and test practical applications internally (like for delivery drivers).

- Continue to build out the Alexa ecosystem to be ready for these new AR endpoints.

Is that a fair summary of Amazon’s approach?

John: I think that’s an excellent summary, Lila. It’s a multi-pronged strategy. The roadmap isn’t just about a single product launch; it’s about cultivating the underlying technology, identifying compelling use cases (starting with internal ones that have clear benefits), and ensuring their core AI, Alexa, is ready to be the intelligent assistant powering these future experiences. The articles emphasize Amazon’s “journey” in AR, which implies a sustained, long-term effort rather than a short-term project.

Lila: What about timelines? Do these recent developments give us any clues about when we might see more advanced AR glasses from Amazon or its partners?

John: The news doesn’t give explicit product launch timelines for Amazon’s own potential consumer AR glasses. However, the significant funding for a company like IXI, aimed at helping them “launch” their technology, suggests that some of these advanced optical systems could start appearing in products within the next couple of years, perhaps initially in specialized eyewear or integrated by various manufacturers. Amazon’s own consumer offerings would likely follow once the technology is mature enough to meet their standards for user experience and integration with Alexa. The delivery driver AR glasses, if successful internally, could also accelerate development by providing invaluable real-world data and feedback.

Lila: So, we should keep an eye on companies like IXI and developments from the Alexa Fund as leading indicators of what’s coming next. It’s an exciting space to watch!

John: Absolutely. The activities of the Alexa Fund are a very good barometer of Amazon’s strategic direction in voice, AI, and increasingly, AR. They are actively shaping the building blocks of this future.

FAQ: Your Questions Answered

Lila: John, this has been incredibly insightful. I bet our readers have a few quick questions. Maybe we can tackle some common ones?

John: Great idea, Lila. Let’s do a quick FAQ section.

Lila: Okay, first up: What’s the main difference between Augmented Reality (AR) and Virtual Reality (VR)?

John: The core difference is your perception of the real world. Augmented Reality (AR) overlays digital information onto your existing real-world view – you still see the world around you, but with added digital elements. Think of Pokémon Go or navigation arrows on your glasses. Virtual Reality (VR), on the other hand, completely replaces your real-world view with a simulated, digital environment. You wear a headset that blocks out your surroundings and immerses you in a different world.

Lila: Next: Are all smart glasses AR glasses?

John: Not necessarily. The term “smart glasses” is quite broad. Some smart glasses, like early versions of Amazon’s Echo Frames, primarily offer audio features, notifications, and voice assistant access (like Alexa) without a visual display that overlays information onto your view. True AR glasses specifically include a display system to project those digital overlays, enabling augmented reality experiences. So, all AR glasses are smart glasses, but not all smart glasses are AR glasses.

Lila: Good distinction! How about this: Why is Amazon, through the Alexa Fund, investing so much in AR smart glasses companies like IXI?

John: Amazon sees AR smart glasses as a key future platform for their AI assistant, Alexa, and for ambient computing in general. By investing in companies like IXI, which is developing crucial technology like autofocus lenses, the Alexa Fund helps:

- Advance core technology: Making AR glasses more functional, comfortable, and accessible.

- Foster an ecosystem: Encouraging the development of hardware and software that can integrate with Alexa.

- Gain strategic insights: Staying at the forefront of AR innovation and potentially identifying future partners or acquisition targets.

- Expand Alexa’s reach: Moving Alexa beyond smart speakers and into truly wearable, context-aware devices.

Essentially, they are helping to build the future hardware that will run their future software and services.

Lila: That makes sense. And what are some of the biggest challenges for AR smart glasses adoption?

John: The main challenges include:

- Technology: Battery life, display quality (brightness, field of view), processing power, and miniaturization.

- Cost: Making them affordable for the average consumer.

- Social Acceptance & Privacy: Overcoming concerns about aesthetics and the implications of wearable cameras/microphones.

- Content & Use Cases: Developing compelling applications that make them indispensable.

Lila: One more: When can we expect to buy truly capable AR smart glasses that look like normal glasses?

John: It’s an ongoing process. We’re seeing more stylish and capable devices emerge, but the “looks exactly like normal glasses but with full AR” ideal is still a few years away for the mass market. Progress is rapid, though. Companies like IXI are working on the optical components to make this more feasible. I’d estimate we’ll see significant improvements and more consumer-ready options in the next 3-5 years, with wider adoption following as the technology matures and prices come down.

Related Links and Further Reading

John: For those looking to dive deeper, we recommend keeping an eye on tech news sites that cover XR and wearable technology. Specifically, publications that have been tracking Amazon’s AR developments and the Alexa Fund’s investments would be a good start.

Lila: And searching for news about companies like IXI, Vuzix, and of course, Amazon’s own announcements regarding Alexa and hardware will provide ongoing updates.

Disclaimer: This article is for informational purposes only and should not be considered investment advice. The tech landscape is rapidly evolving. Always do your own research (DYOR) before making any investment decisions.

“`